Gemini / Android — Unifying Research to Define the Future of On-Device AI

Client: Google - Gemini UXR

Duration: September to December 2024

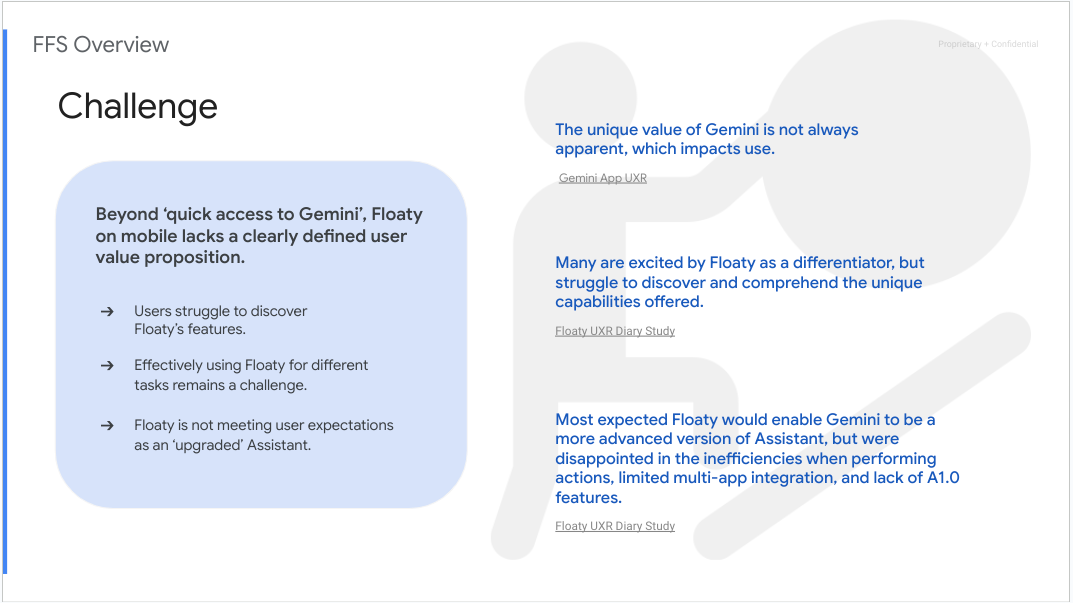

Challenge

Product managers needed a unified view of user pain points, expectations, and opportunities to increase adoption of Floaty, Gemini’s on-device entry point. Multiple teams had explored the feature in isolation, but there was no shared understanding of how users interacted with it across different contexts or devices.

Approach

I designed and led a three-phase project to synthesize fragmented insights and guide product direction:

Conducted an internal audit of all existing research related to on-device AI and Floaty interactions.

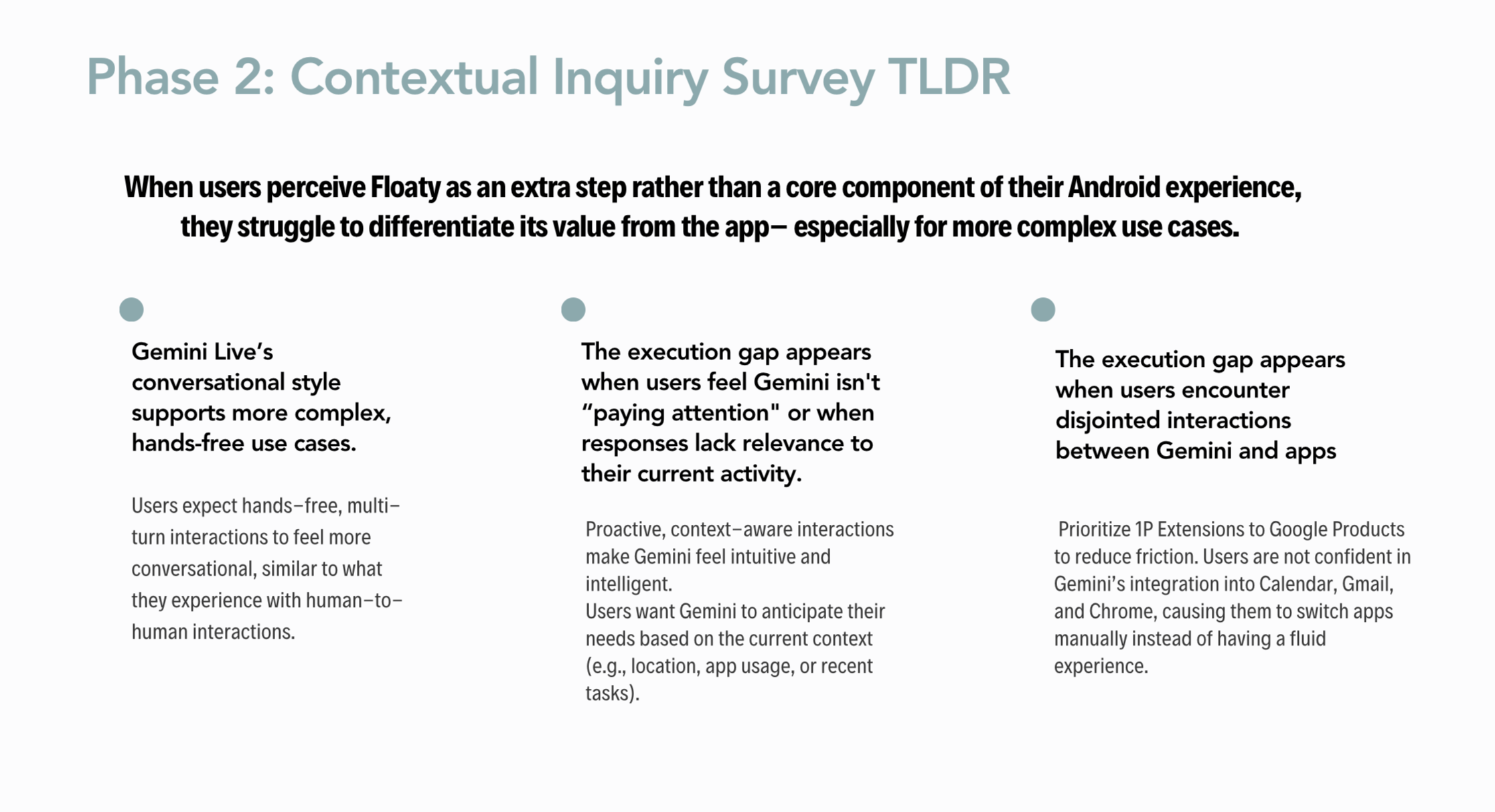

Performed a contextual analysis of qualitative data to identify consistent themes across studies.

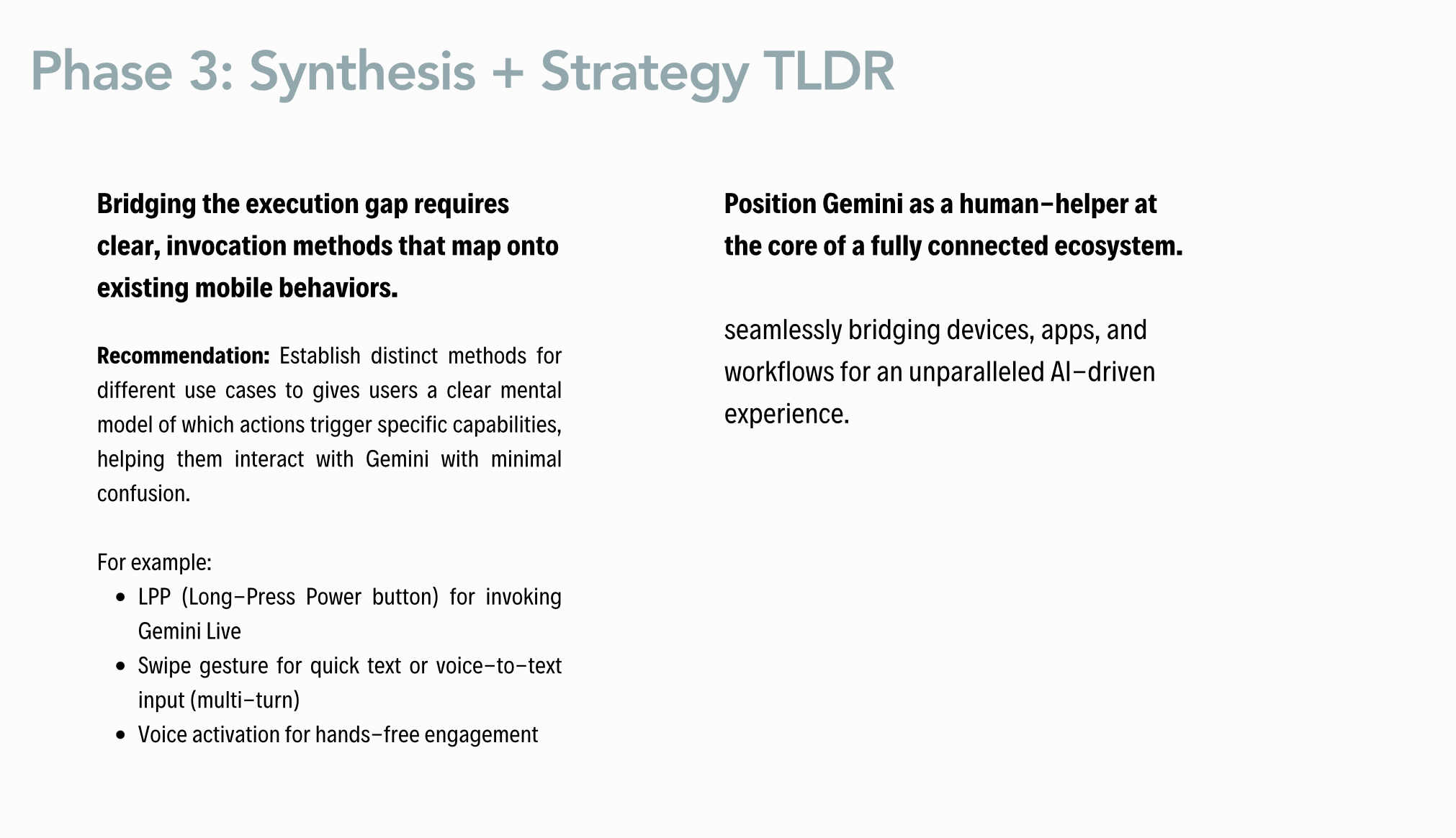

Developed a strategic recommendation for a long-press, voice-first experience, replacing the swipe-up gesture, to better match observed user behavior and accessibility needs.

During the analysis, I uncovered an execution gap: users were excited about the potential of an AI assistant but often didn’t know what steps to take to achieve their goals. I also found a tension between providing too many prompts and respecting users’ desire for organic discovery, a challenge characteristic of AI interfaces, where capabilities aren’t always visible.

Outcome

The research clarified user expectations and shaped a new product direction focused on surfacing contextual entry points and natural discovery moments aligned with user intent. The synthesis helped unify teams around a shared understanding of AI adoption barriers, giving future Gemini integrations a common basis for being both intuitive and discoverable.